
At the Augmented Enterprise Summit (AES 2025), Purnendu Mukherjee, founder and CEO of Convai, presented a compelling vision for the future of training and L&D: make it conversational, embodied, and agentic inside XR. This recap of the talk describes how AI-powered virtual humans are transforming learning and training.

Readers will find: a quick skills-spectrum overview (soft ↔ hard), a step-by-step product walkthrough of Convai’s creation-to-deployment flow, assessment and analytics, multi-modality delivery (text, voice, avatar call, full XR), security & deployment (including on-prem/air-gapped), and a slate of real-world demos across defense, manufacturing, enterprise, and space—featuring partners like Buendea.


Most AI systems, even cutting-edge LLMs, remain text-first. But humans don’t learn primarily through text. From infancy, we learn through spatial awareness, navigating and acting in a 3D world, and then ground language in that embodied experience. XR (extended reality) and high-fidelity 3D simulations create the best conditions to give AI a similar embodied self: an agent that can perceive the scene, reason about objects and people, and act appropriately.

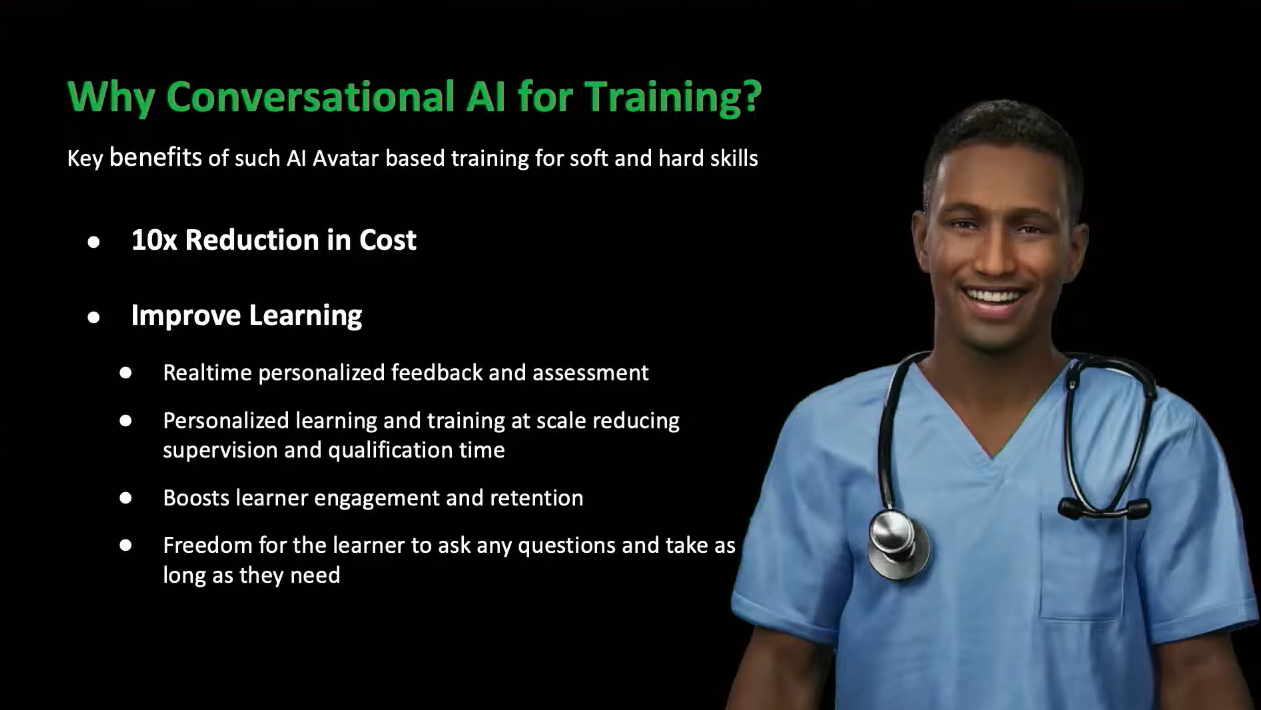

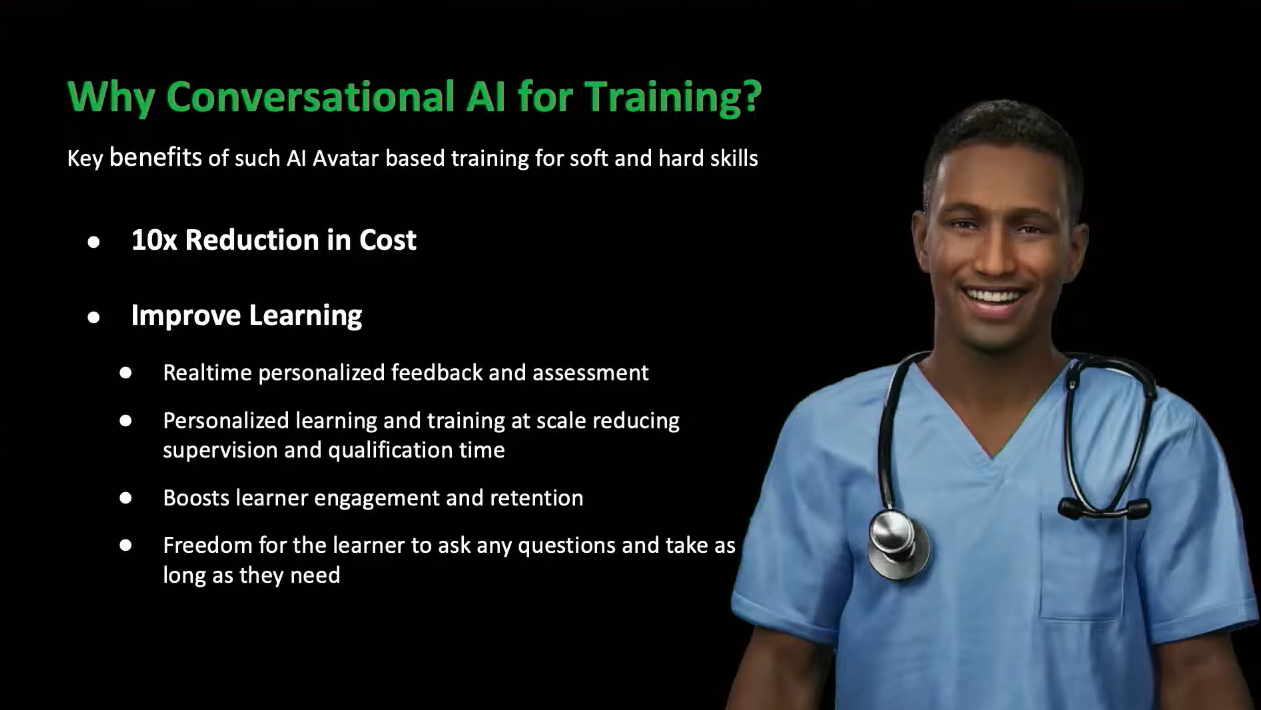

For enterprise L&D teams, that shift from “text on screens” to spatially grounded, conversational practice is what drives measurable training outcomes: better retention, higher engagement, and faster time-to-competency.

In 2017, before “embodied AI” became a mainstream term, Purnendu argued that 3D spatial awareness would be the next big wave in AI. His thesis work and an accompanying blog explored how perception and action in space could anchor more human-like intelligence.

He then joined NVIDIA, contributing to large-scale training and inference (including early BERT work) and collaborating with Jensen Huang’s team on high-visibility demos. In 2020, NVIDIA shipped its first 3D avatar chatbot, showcasing real-time dialogue in a virtual environment.

Building on that momentum, Convai was founded to place conversational and agentic AI inside virtual worlds: giving avatars a mind (backstory, personality, memory, knowledge) and the capacity to perceive, converse, and move. An early open-ended ramen shop demo went viral, hinting at what soft-skills role-play in XR could feel like when it’s not scripted but truly live and responsive.

When Convai opened up as a developer platform for spatial computing, organizations tried it across categories—gaming, brand experiences, digital twins—but training quickly dominated. Over the last year, Convai leaned into enterprise L&D and XR training, where role-play with AI-powered avatars and procedural assistance deliver immediate value.

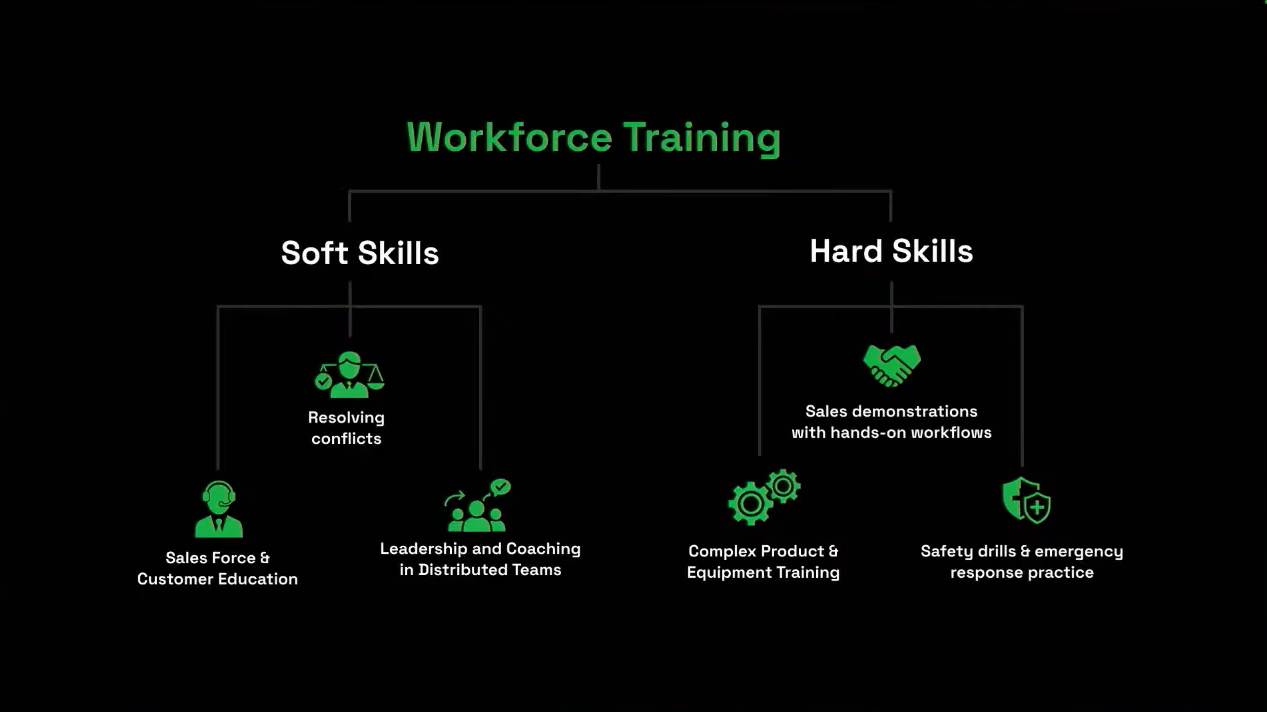

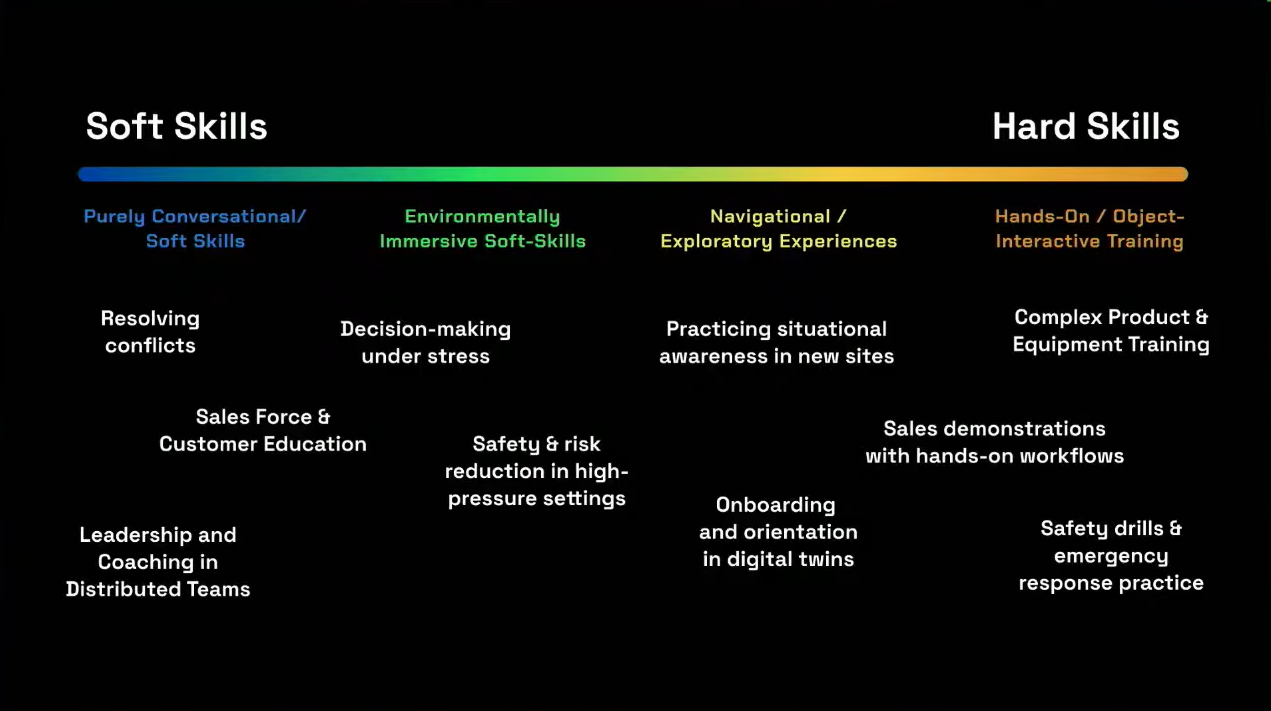

In L&D, real deployments rarely fit into neat “soft vs hard skills” boxes. Instead, scenarios sit along a spectrum where the role of environment, stress, navigation, and object interaction varies.

1) Pure conversation (soft skills)

2) Situational soft skills (context matters)

3) Navigation-dependent scenarios

4) Hands-on hard skills

With a clearer picture of what Convai does for organizations, especially in accelerating learning and training, here is how to create your own AI-powered virtual human with Convai.

Use Convai’s studio to define backstory and personality, connect documents & knowledge, choose voice/language and the LLM, and configure memory plus guardrails. The result is a consistent persona that knows your products, policies, and procedures and stays on brand.
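The settings listed above can be pictured as a single configuration record. The following is a minimal sketch in plain Python, not Convai's actual API or schema: the `CharacterConfig` class, its field names, the `validate` helper, and all example values are hypothetical, chosen only to mirror the items named in the paragraph.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterConfig:
    """Hypothetical record of a character's 'mind'; not a Convai type."""
    name: str
    backstory: str
    personality: list[str]
    voice: str
    language: str
    llm: str
    knowledge_files: list[str] = field(default_factory=list)
    memory_enabled: bool = True
    guardrails: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return human-readable problems; an empty list means ready to test."""
        problems = []
        if not self.backstory.strip():
            problems.append("backstory is empty")
        if not self.knowledge_files:
            problems.append("no knowledge documents connected")
        if not self.guardrails:
            problems.append("no guardrails configured")
        return problems


# Example values are placeholders, not real voices, models, or files.
trainer = CharacterConfig(
    name="Safety Trainer",
    backstory="A veteran line supervisor who now onboards new hires.",
    personality=["patient", "precise"],
    voice="example-voice",
    language="en",
    llm="example-llm",
    knowledge_files=["safety_policy.pdf", "line_changeover.pdf"],
    guardrails=["Stay on company policy; decline unrelated topics."],
)
print(trainer.validate())
```

A check like `validate` is one way an instructional designer might catch a missing knowledge source or guardrail before moving on to a test session.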

Select from a large avatar library or import your custom character (e.g., XR trainer, brand ambassador). Visual identity matters for learner trust and recall.

Spin up a live video call session to validate knowledge grounding, tone, and scenario flow. Instructional designers can iterate quickly before pushing to 3D/Web/XR.

Run high-fidelity scenes from any device with a modern browser, no local GPUs needed! Trainees walk, look around, and talk to characters that can also navigate and interact—ideal for spatial computing programs and distributed teams.

Lack of animations can break immersion (“can you pour a drink?”). With XR Animation Capture, trainers record the motion once in-headset, save it, and the AI can re-enact it contextually. Over time, you build a reusable action library.

Import scanned environments (e.g., via Gaussian Splatting), place waypoints, and link the knowledge bank (manuals, PDFs, slide decks). The agent becomes a location-aware guide, answering contextual questions on the spot.
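One way to think about a location-aware guide is as a nearest-waypoint lookup: the learner's position selects which documents are in scope for the next answer. The sketch below assumes that model for illustration only. `Waypoint`, `nearest_waypoint`, `documents_for`, the `max_distance` cutoff, and the file names are all hypothetical and do not describe how Convai implements this.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    """Hypothetical waypoint: a named spot in the scene with linked documents."""
    name: str
    position: tuple[float, float, float]
    documents: tuple[str, ...]


def nearest_waypoint(position, waypoints):
    """Return the waypoint closest to `position` (Euclidean distance)."""
    if not waypoints:
        raise ValueError("at least one waypoint is required")
    return min(waypoints, key=lambda w: math.dist(position, w.position))


def documents_for(position, waypoints, max_distance=5.0):
    """Documents in scope at `position`, or () if no waypoint is close enough."""
    wp = nearest_waypoint(position, waypoints)
    if math.dist(position, wp.position) > max_distance:
        return ()
    return wp.documents


waypoints = [
    Waypoint("press_line", (0.0, 0.0, 0.0), ("press_manual.pdf",)),
    Waypoint("paint_booth", (10.0, 0.0, 0.0),
             ("paint_safety.pdf", "changeover_slides.pdf")),
]
print(documents_for((1.0, 0.0, 0.5), waypoints))
```

The distance cutoff is a design choice in this sketch: far from any waypoint, the guide would fall back to general knowledge rather than quote the wrong station's manual.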

Convai integrates with Unreal Engine, Unity, Three.js, and NVIDIA Omniverse (extension available). It is highly rated on those platforms' marketplaces and supports the DevOps and content workflows that enterprise teams expect.

Ship once, deliver everywhere: text, voice, avatar call, or full XR.

The steps above cover how to create your own AI-powered virtual human. Once the character's mind is in place, the next decision is which type of agent serves your goal: embodied or disembodied.

A disembodied agent is a voice-first guide with on-screen prompts in immersive scenes. It is well suited to procedural training, checklists, troubleshooting, and contextual Q&A without a visible avatar.

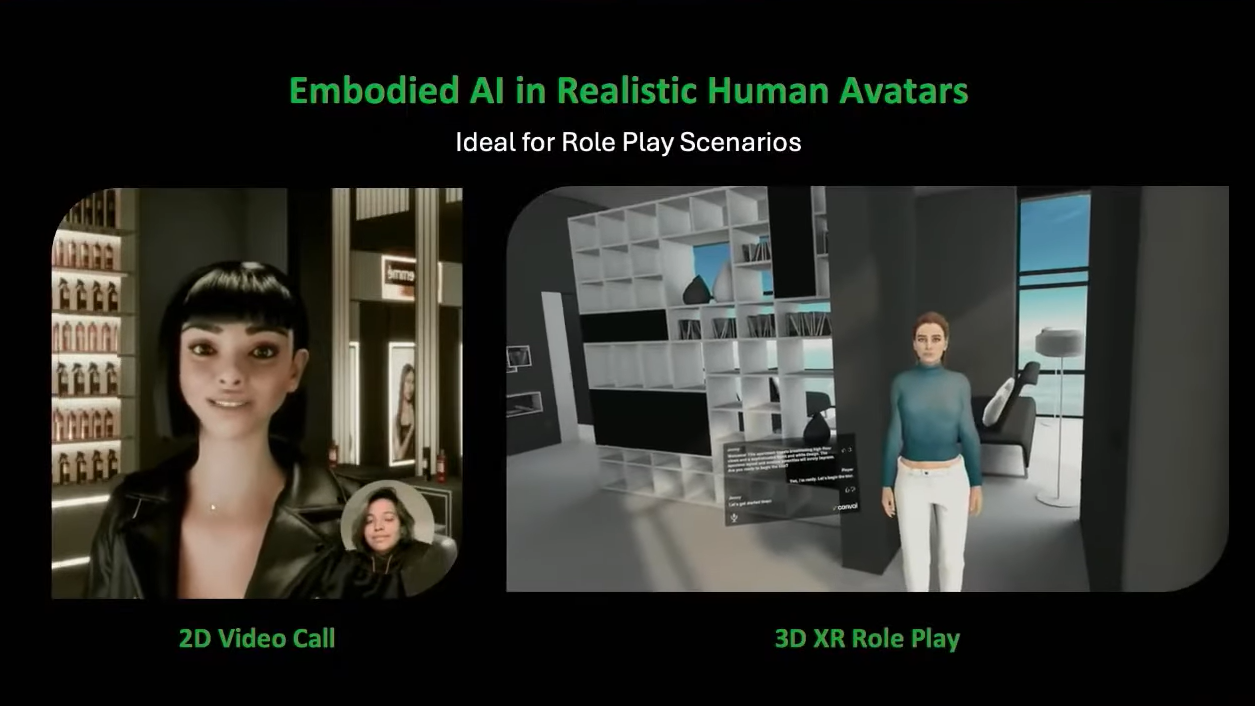

An embodied agent is the most effective format for role-play. Learners practice eye contact, tone, pacing, and empathy with a present character, delivered via a 2D avatar call or fully in 3D/XR.

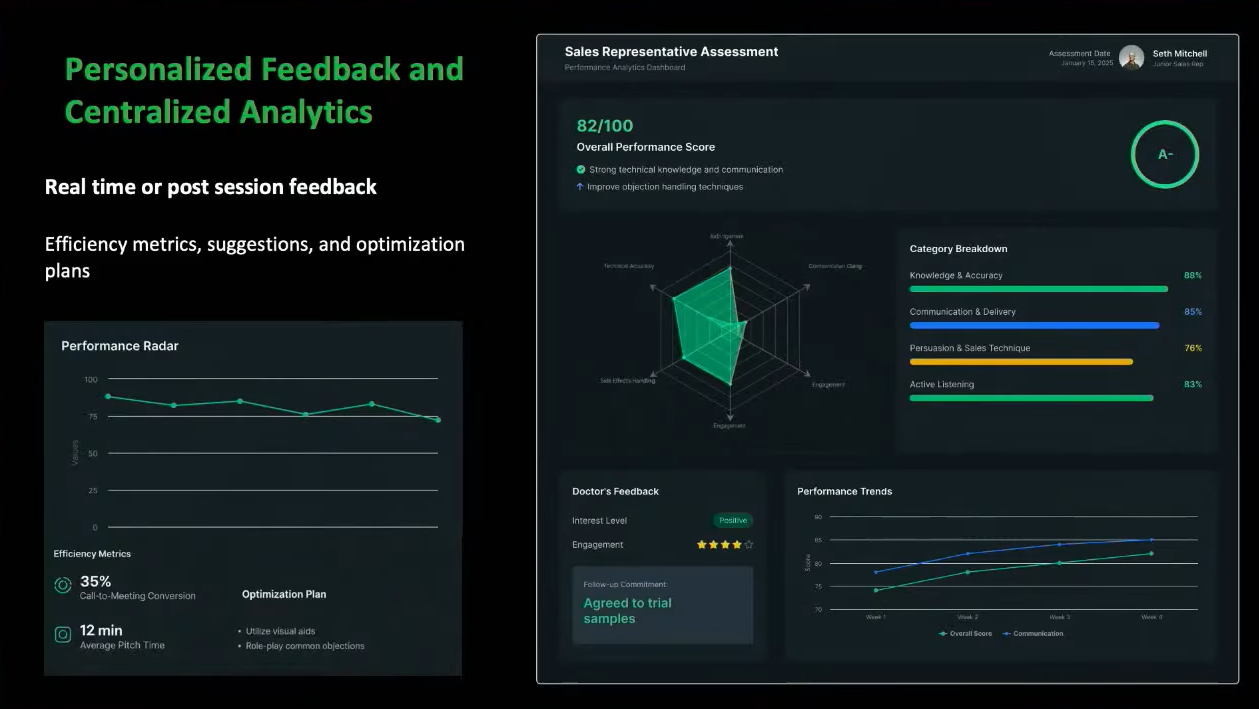

Define custom rubrics aligned to business outcomes, for example knowledge accuracy, policy compliance, tone, empathy, delivery, procedural correctness, time to completion, and error recovery. Convai analyzes each session and generates individual and cohort-level reports for learners, managers, and instructional designers.
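To make the rubric idea concrete, here is a minimal sketch of weighted scoring with a per-learner and per-criterion roll-up. It is an illustration under stated assumptions, not Convai's analytics: the criterion names come from the list above, but the weights, the 0 to 100 scale, and the functions `session_score` and `cohort_report` are hypothetical.

```python
from statistics import mean

# Hypothetical weights; a real rubric would be set by the L&D team.
RUBRIC = {
    "knowledge_accuracy": 4,
    "policy_compliance": 3,
    "empathy": 2,
    "time_to_completion": 1,
}


def session_score(scores, rubric=RUBRIC):
    """Weighted average of per-criterion scores (each assumed to be 0-100)."""
    missing = set(rubric) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    total_weight = sum(rubric.values())
    return sum(rubric[c] * scores[c] for c in rubric) / total_weight


def cohort_report(sessions, rubric=RUBRIC):
    """Per-learner weighted scores plus the cohort mean for each criterion."""
    return {
        "per_learner": {name: session_score(s, rubric)
                        for name, s in sessions.items()},
        "criterion_means": {c: mean(s[c] for s in sessions.values())
                            for c in rubric},
    }


sessions = {
    "learner_a": {"knowledge_accuracy": 80, "policy_compliance": 100,
                  "empathy": 60, "time_to_completion": 50},
    "learner_b": {"knowledge_accuracy": 100, "policy_compliance": 100,
                  "empathy": 100, "time_to_completion": 100},
}
print(cohort_report(sessions))
```

Keeping the per-criterion means alongside the overall scores lets a manager see whether a cohort is weak on one skill, such as empathy, even when overall scores look acceptable.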

Typical outputs include:

Many enterprise deployments are private; these examples illustrate the range and fidelity organizations can achieve with XR training and AI-powered avatars.

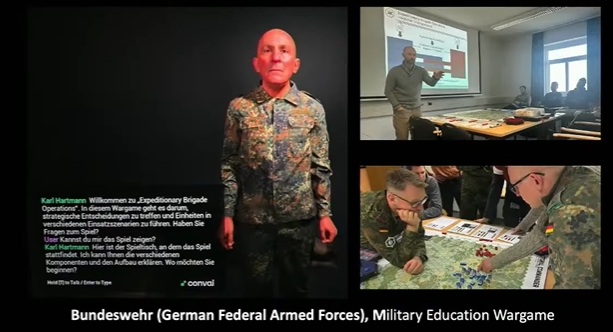

The German Federal Armed Forces use a digital twin of Colonel Carl Hartman to issue orders in a war-gaming pipeline. Trainees interact conversationally while the system ties into execution logic, bridging strategy with simulation.

A factory tour uses spatial waypoints and connected documentation to answer process questions in context. Great for safety walk-throughs, line changeovers, and new-hire onboarding.

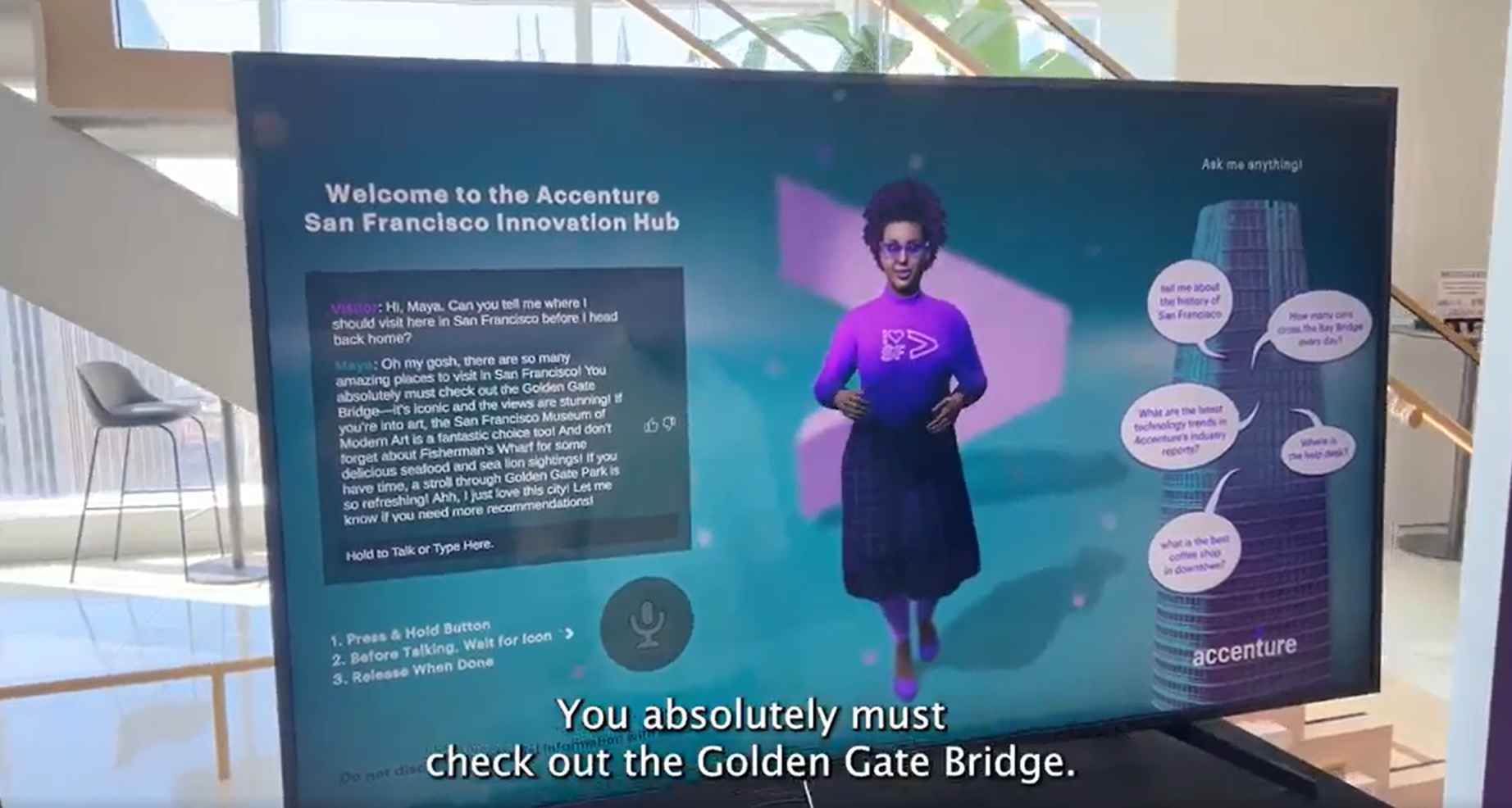

A 3D virtual assistant provides open-ended recommendations and contextual information for visitors, showing how enterprise support can be embedded inside a real environment.

A high-fidelity lunar simulation combines embodied and disembodied agents. The AI gives agentic step guidance (“follow the highlighted path,” “open the power station”), performs multimodal perception (the agent sees what the trainee sees), and answers contextual questions (e.g., rock identification). Scenarios support both AI crew and human multiplayer.

While cloud hosting is available, Convai specializes in on-premise, air-gapped deployments, which are crucial for defense, aerospace, manufacturing, and other sensitive domains. Much of the stack is source-available, enabling enterprise teams to adapt, extend, and integrate Convai into existing pipelines, identity providers, Unreal Engine/Unity content, and NVIDIA Omniverse workflows.